fest·schrift

Pronunciation: ˈfes(t)-ˌshrift

Etymology: German, from Fest celebration + Schrift writing

Date: 1898

: a volume of writings by different authors presented as a tribute or memorial, especially to a scholar

Introduction

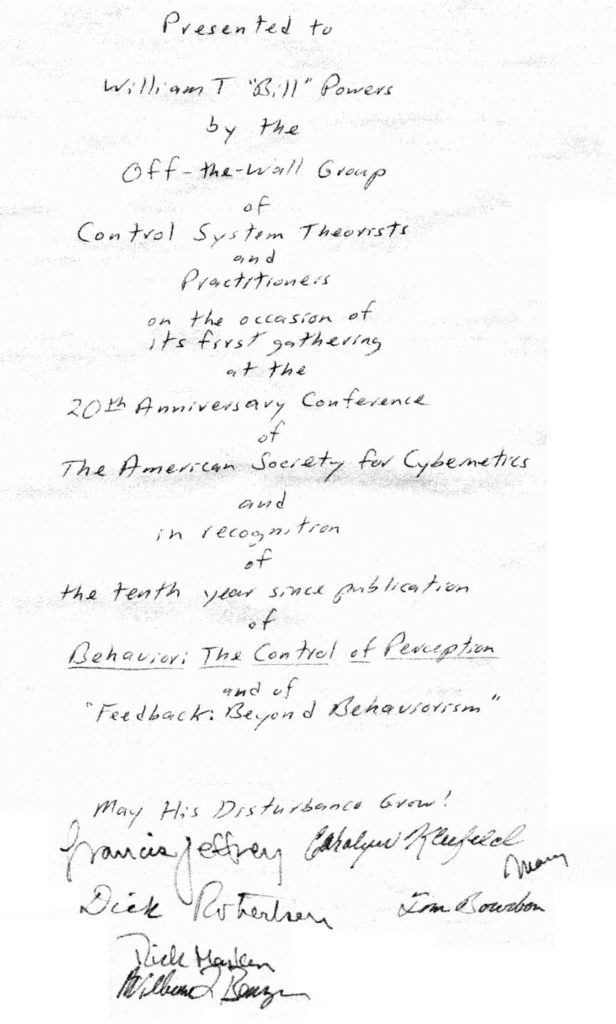

The William T. Powers Festschrift is a contribution by persons influenced by the work of William T. Powers in honor of the 30th anniversary of the publication of Behavior: The Control of Perception. The contributions range from personal tributes to thematic essays. They have come in from all over the world and were put together on various local versions of word processing programs. I have refrained from editorial intervention, so that the style and format used by the authors could be retained. There are still some inadequacies of presentation in a few of the contributions. I apologize for any inconvenience this may cause, but it was impossible for me to alleviate all of these problems at the last moment. Corrections are welcome and will be incorporated into the Festschrift for eventual final presence on the web.

Since the contributions are only a mouse click away, it is unnecessary to comment on them any further. However, I would like to offer a part of the “web site story” as my contribution. This project began in Saint Louis at the 2000 CSG conference. Realizing the forthcoming anniversary of the publication of B:CP in 2003 and being aware of festschrifts as ways of recognizing such landmark events, I suggested the notion of a web-based festschrift for William T. Powers. The suggestion met with immediate approval and was supported as energies allowed throughout the project. The organizational work, approaching contributors, getting emails out to them, doing the amateur web design and construction and doing all those “little” things that pave the way for a project like this, was done by myself. Fred Good, Dag Forssell, Rick Marken and, of course, Mary Powers and her daughters, Barbara and Allie, helped. Thanks to all who helped and all who contributed and who will contribute to this project in recognition of William T. Powers.

After the presentation of this web-based Festschrift, contributors are still invited to submit additions to this project in honor of William T. Powers’ contribution to science.

Lloyd Klinedinst

lloydk@klinedinst.com

Organization by Topics

- Papers Related to PCT

- Personal Reflections

- Pictures Related to William T. Powers and PCT History

- Miscellaneous

- Links

- List of Contributions, Alphabetically Ordered by Author’s Name

1. Papers Related to PCT

1.1 PCT—Yeah? So What?! By Timothy A. Carey

Living things do not respond to stimuli. Nor do their brains command their muscles to behave in certain ways. Living things control their experiences. Controlling experiences seems a simple notion yet its implications are profound. My observation has been that when this idea is explained to most people heads nod in understanding and agreement. People seem to easily notice the phenomenon of control once they know what they are looking for. While control becomes apparent to most people who take the time to notice, what remain invisible are the implications of this phenomenon. Let me see if I can describe what some of these implications might be.

Behavioral scientists who understood that living things controlled their perceptual experiences would not be interested in trying to find connections between stimuli and responses. They would become uninterested because they would know that there is no connection between a stimulus and a response. In fact, not only would they understand that the link between stimulus and response is chimeric, they would also wise up to the fact that, as far as living things are concerned, there is no such thing as either a stimulus or a response. Calling something a “stimulus” is to assert that this something is able to stimulate another something. A stimulus is only a stimulus to the extent that it produces a response in the thing it is stimulating. In the same way, calling something a response is to suggest that this something was brought about by a stimulus.

Perhaps the terms stimulus and response would linger on in the vernacular. After all, we still talk about sunsets and sunrises rather than “planetary rotational illusions” or “visual orbital effects”. When working to learn about the nature of living things, however, the activity of stimulating a living thing and measuring its responses would vanish. Rather, people who wanted to know about living things would investigate both what and how perceptual experiences are controlled.

The seemingly innocuous idea of studying the process of control rather than the stimulus/response connection would irrevocably change the activity of perhaps every behavioral scientist who clocks in for work today. Rather than scrutinizing the eye-blink response and the way it varies in relation to different puffs of air, a control scientist would be interested in what conditions of the eye are being maintained in particular states. What is the moisture level on the surface of the eye? How much variation in moisture level is tolerated before this variation is eliminated? Is a particular amount of pressure on the surface of the eye also kept constant?

Answers to new questions about what and how an eye controls would begin to shed some light on the mystery of how an eye goes about the business of seeing. Some things about what we already know would probably still be useful. In attempting to answer new kinds of questions about what an eye does, however, we would have to become a lot less sure about what we think we already know. For a time we would be less certain about that small part of the world that we thought we had pinned down. The functioning of the eye is one thing we would be less certain about once we recognized the controlling nature of things that live. But for people who have difficulty dealing with uncertainty, the bad news is that our knowledge about the eye would not be the only thing that would be affected. Everything, in fact, that we currently believe about living things would need to be reconsidered from a control perspective and almost everything would either be revised or rejected.

Seeking to understand different aspects of the phenomenon of control would of course be a fatal blow for the IV-DV research approach that currently exists. IV-DV is just a sneaky way of writing stimulus-response. The idea here is that we vary IV’s and measure the effect on DV’s. Behavioral scientists who cared anything at all about doing a good job realized long ago that there actually is no reliable connection between IV’s and DV’s. So that they could continue to think they were doing a good job—they were, after all, controlling their experiences—they began to use statistics.

Statistics are to a behavioral scientist what a top hat is to a magician. Illusory relationships are made to look real when statistics are employed. Behavioral scientists discovered that if you gathered together a whole bunch of living things and engaged them in an activity, you could assign numbers to different aspects of the activity. Statistical procedures could then be used to combine the numbers that were produced from the results of all their individual activities. Methods were invented to turn all these individual numbers into just one number. The new number that was created was meant to represent something. That special single number could then be tested to see if it was special enough.

If the number wasn’t special enough or if behavioral scientists wanted to make it seem even more important they created a bigger bunch of living things. The more living things the more important the number. Unfortunately, as the bunch of living things grows and as the impressiveness of the combined number soars, the less we know about any particular individual in the bunch. This is no way to unearth the scientific laws of living.
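The trade-off described above can be illustrated with a small simulation. This is only a sketch on made-up data, not anything drawn from an actual study: the effect size of 0.1, the two sample sizes, and the helper names `pearson_r` and `group_summary` are all assumptions chosen for illustration. It uses the standard t statistic for a correlation coefficient as a stand-in for "how special the number is", and the squared correlation as a rough measure of how much the group result says about any one individual.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def group_summary(n, effect=0.1, seed=0):
    """Simulate n individuals with a weak IV-DV link (hypothetical data).

    Returns (r, t): the sample correlation and its t statistic,
    t = r * sqrt((n - 2) / (1 - r^2)).
    """
    rng = random.Random(seed)
    iv = [rng.gauss(0, 1) for _ in range(n)]
    dv = [effect * x + rng.gauss(0, 1) for x in iv]
    r = pearson_r(iv, dv)
    t = r * math.sqrt((n - 2) / (1 - r * r))
    return r, t


r_small, t_small = group_summary(400)
r_large, t_large = group_summary(40000)

# The t statistic grows with the size of the bunch, while r squared
# (the share of individual variation accounted for) stays small.
print(f"n=400:   r^2={r_small ** 2:.4f}  t={t_small:.2f}")
print(f"n=40000: r^2={r_large ** 2:.4f}  t={t_large:.2f}")
```

Under these assumptions the bigger bunch yields a far more impressive test statistic, yet the squared correlation remains tiny in both cases, so knowing an individual's IV value still tells you next to nothing about that individual's DV value.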

Other things can also be done to make numbers seem more important. Ultra-tricky statistical procedures have been created which add new bits to the numbers that are already there or even take bits away from the numbers that exist. This sometimes has the effect of making the number that represents the bunch become more important. These kinds of procedures will tell you a lot about numbers but they won’t tell you much at all about living things. Numbers don’t care what you do to them—living things do.

Control scientists wouldn’t spend time combining large bunches of living things together to find out how they behave. Control scientists wouldn’t look for IV-DV relationships. Control scientists would want to know what living things control. To find that out they would study individual living things. They would identify the controlling characteristics of the individual and then they would look for similar characteristics in other individuals. Control scientists would be hunting for whatever it is that all living things of a particular kind control. After a successful hunt, control scientists would understand a little bit more about what it means to be that kind of living thing.

Control scientists wouldn’t need to spend time learning to do tricky things with numbers. Instead they would spend their time learning to build models that work. When control scientists thought they had tripped over a good idea in their laboratory, their next job would be to build a model of the idea. If the model they built was able to control in the same way as whatever it was they were trying to understand then they would think their idea was pretty good. If the model didn’t control in the same way then they would need to do some rethinking. That’s how control science would work—playing with models, not numbers.
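To give a feel for what "a model that controls" might look like, here is a minimal sketch of a single control loop. It is an illustration under stated assumptions, not Powers' own code: the names `simulate_control` and `rms`, the gain of 50, the time step of 0.01, and the sine-wave disturbance are all hypothetical choices. The loop perceives the sum of its own output and an outside disturbance, compares that perception with a reference, and slowly changes its output to reduce the difference.

```python
import math


def simulate_control(disturbance, reference=0.0, gain=50.0, dt=0.01):
    """Run a one-level control loop; return (perceptions, outputs).

    All parameter values are illustrative assumptions.
    """
    output = 0.0
    perceptions, outputs = [], []
    for d in disturbance:
        perception = output + d          # what the system senses
        error = reference - perception   # difference from what it "wants"
        output += gain * error * dt      # output changes to shrink the error
        perceptions.append(perception)
        outputs.append(output)
    return perceptions, outputs


def rms(values):
    """Root-mean-square size of a list of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


# A slow sine-wave disturbance lasting 20 simulated seconds (hypothetical).
disturbance = [math.sin(2 * math.pi * 0.2 * k * 0.01) for k in range(2000)]
perceptions, outputs = simulate_control(disturbance)

print("rms disturbance:", rms(disturbance))
print("rms perception: ", rms(perceptions))
print("rms output:     ", rms(outputs))
```

In this sketch the perception stays close to the reference even though the disturbance swings widely, and the output ends up roughly mirroring the disturbance. Checking an idea would then mean feeding such a model the same disturbance a living thing faced and seeing how closely the model's output matches what the living thing actually did.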

Some behavioral scientists are so good at statistical procedures that they call what they do modelling. This modelling is very different from the modelling that a control scientist would do. Statistical modelling uses large bunches of numbers to create important connections between different sets of numbers. In other words, it is still trying to explain the IV-DV link. Sometimes in a sophisticated kind of way the IV is called a predictor variable and the DV is called a criterion variable. No matter. They are still describing the same kind of relationship. The toys in their playpen are still stimuli and responses.

In many ways it would be unfair to leave the discussion of statistics at this point. The purpose of this foray into the world of statistical methods was not to malign the legitimacy of these procedures. It is not the techniques that are at fault but their application that is awry. When statistics are used to determine the relative importance of a particular number no reliable conclusions can be made about the behavior of any particular individual in the group from which the number came. Statistical methods will provide information about general characteristics of a group but they will yield absolutely zilch in terms of elucidating the principles and laws that govern the nature of living things. Statistics are not the problem. The stories created from their results are.

Stimuli and responses can be disguised in other ways as well. One sly move that occurred a few years ago was to extract the stimulus from the environment and to pop it into the head of a living creature. Some optimistic people called this tricky maneuver a revolution—a cognitive revolution. When a stimulus is inside a living thing rather than outside it is called a cognition—or a belief, a thought, a brain command, an attitude—the labels are many but a stimulus by any other name …

Because control scientists would use models to check their ideas they wouldn’t be very concerned with the name they attached to any particular bit. For a control scientist what would be important would be how the bit functioned in the model not the particular word sound that was used to name it. Control scientists then wouldn’t consider that they had improved their theory when they produced better sounding words. Nor would they think their model was better when the diagram looked nicer or when double headed arrows connected more boxes in a greater variety of ways. They would consider they had improved their theory when their model controlled like the creature they were modelling. We don’t need new word sounds; we need to figure out what’s going on.

Obviously some things are going on and some things aren’t going on. If you’re trying to solve the puzzle of how something actually works, some answers will be correct and some will be incorrect. Behavioral scientists, however, are very reluctant to say that any idea is wrong. With a wave of the hand and a puff of smoke, statistics can be used to show that almost any idea is interesting and useful. Behavioral scientists came up with the convenient tool of “operationalising”. Operationalising just means saying that something you don’t understand very clearly can be what you say it is. Depression can be operationalized by counting up someone’s scores to answers on a test. The number they get on the test then represents how much depression there is. At least that’s the way the story goes.

Another very handy strategy to use with operationalising is “forgetting”. Behavioral scientists tend to forget that the number they have obtained is, at best, a representation of depression. They say they are measuring depression, not scores on a test. They say that depression has decreased when the numbers get smaller and worsened if the numbers get bigger. They use statistics to do things to the numbers and then tell us what happened to the depression. They don’t seem to know that people are controlling even when they are putting circles around numbers on an official looking piece of paper.

Control scientists would not be interested in operationalising. They would not be interested in what something was called. They would be interested in how something functioned. By being interested in the way something functioned they would have to accept that some ideas would be right and some would be wrong. Calling an idea “wrong” is not a nice thing to do when you’re mixing with behavioral scientists. With crafty methods like operationalising and statisticalising any idea can be made to seem even a little bit right. Behavioral scientists need long sleeves. The wizardry of statistics requires much sleight of hand.

Control scientists would roll their sleeves up and spend time investigating the phenomenon of control. Control scientists would discard an idea if it was wrong. It would be OK to call an idea wrong with a control scientist. Control scientists wouldn’t spend time making wrong ideas seem a little bit right. They would want to know what it was that was wrong and how the model needed to be changed so that it would be right. “Right” to a control scientist would mean accurate. If the model accurately simulated what was being observed then it would be regarded as the closest thing to “right” at the moment. If the model wasn’t accurate then something about it would be regarded as being wrong and it would need to be changed.

Wrongness and rightness seem to be uncomfortable notions for many psychologists and behavioral scientists. In the world of psychology anything goes. “Do what you wanna do, be what you wanna be, yeah …” is the tune that is hummed. The arena of psychotherapy is a startling demonstration. Someone who could be bothered to count them once estimated that there were over 400 different types of psychotherapy. This is inclusivity and acceptance gone berserk. As one method fails to help all people all the time it is tinkered with and adapted. Finally it is given a new name and an inspiring practitioner runs new workshops to teach new techniques that hold greater promise than previous methods. Some people think they understand what is happening and remain loyal dispensers of one method or another. Other people acknowledge that they don’t know what’s going on and call themselves “eclectic”.

It is mind-numbingly obvious that not all of the different methods can be accurate in their explanations of the problem. If 20 different people were all treated for something called depression with 20 different types of psychotherapy and they all got better, then the 20 different methods used can undoubtedly not be the reason the people got better. Much of what is happening in psychotherapy cannot account for why people get better. A great number of the methods in psychotherapy are at best unnecessary and at worst detrimental.

Control scientists would only want to do what was necessary. Control scientists who wanted to help people get better would not accept any explanatory story that came along. Control scientists would base their helping methods on the most accurate explanation of control that they could find. This of course would limit the kinds of things that control scientists could do. When methods are based on theoretical principles then some methods will be consistent with the principles and some won’t. The benefit of this limitation however is an accurate understanding of people’s problems. From this understanding comes an ability to assist people efficiently and meaningfully where assistance is required and also to determine when assistance is not necessary.

If control was the only show in town much of the same type of activity would still occur. People would still have problems that they would need help with. Problems would need to be assessed, diagnosed, and treated. Where problems existed, however, they would be seen as problems with control. A something that is designed to control will only experience problems when its ability to control is interfered with in some way. When people with problems put themselves in front of a control scientist what would be assessed would be the people’s abilities to control different things. Perhaps the range and limits of their control abilities would be explored. Diagnoses would be statements about their abilities to control. Where treatment was required treatment would focus on helping people improve their abilities to control.

Control scientists would understand that what people control are their perceptions. That is, what they see and hear and feel and taste and smell and touch—what they experience. People don’t control their behavior. If people are going to control their experiences they have to let their behavior change in whatever way is necessary so that their experiences continue to be the way they want. They have to constantly let the muscle tensions in their legs change so that they can remain upright on the deck of a rolling boat. They have to let their racquet go to the right place so that the ball will land in the middle and sail back over the net. They have to be prepared to turn the taps any which way until the temperature of the bath feels right. They need to squeeze the bottle differently so that the right amount of detergent oozes into the sink.

So control scientists wouldn’t have to operationalize imaginary ideas like intelligence and personality. They wouldn’t assess people by asking them to produce numbers that supposedly represent different bits of intelligence or different types of personality. Nor would they use space-age machinery to make different bits of the brain light up in response to different stimuli. They would know that different colors on images of a brain would tell them nothing about difficulties in controlling.

Control scientists wouldn’t diagnose people based on reports of behaviors that occur too much or too little and they wouldn’t treat people by trying to change their behavior. Social skills programs, assertiveness programs, and anger management programs are some of the programs that wouldn’t be necessary. The use of drugs to increase or decrease behavior would also not be necessary.

We’re not sure just yet exactly what would be necessary. Many people seem to find a way to solve their problems when they are told to do different things with their behavior. But since people don’t control their behavior it can’t be anything about the particular behavioral program that helped them solve their problem. Nor could it be anything about a drug changing their behavior that helped them solve their problem. Somehow, when people are given ways of changing their behavior, some people manage to figure out how to control their experiences more effectively. Control scientists would prefer to use science rather than serendipity to help people solve their problems. Using serendipity is an approach that anyone is entitled to take. It is perhaps not unreasonable to expect, however, that when people practice serendipitously they would say that’s what they are doing rather than pretending they are practicing scientifically. Serendipity and science are not the same and one should not be called the other.

So let’s see if I can bring together all of what I am trying to say. The implications of control are profound. They reverberate through every area of investigation of living things. At a cellular level control scientists would be interested in how cells keep certain chemical concentrations at particular levels. Even before cells get off the ground we could benefit by thinking about genetic instructions as part of the process of control. In this way genes wouldn’t be seen to be commanding anything to occur other than particular states of the DNA strand. Everything else would be seen as a side effect of this control.

But back to cells … By studying how cells control we might come to understand the normal functioning of a cell. Once we understand how a cell functions and what it actually is that a cell does when it’s doing normal celly things, we might also begin to comprehend what it is that goes wrong when problems occur. Perhaps for the first time we would begin to understand really scary diseases like cancer. Considering something like cancer from the perspective of cellular control would enable us to finally figure out what’s going on when cancer occurs. Once we get to know cancer for what it is we’ll be in a mighty position to beat the rascal at its own game. Until that time we’ll continue to use treatments as battering rams and rely on the strategy of hope.

At the level of cellular systems we’d strike it rich by considering problems from a control perspective. We might understand what happens to a pancreas when it isn’t able to control effectively anymore and someone develops diabetes. It’s also likely that we’d begin to understand what it is about control processes that were collapsing when someone develops a degenerative disorder like multiple sclerosis or Huntington’s disease. Once we understood what was going on here in terms of disruptions to control processes we’d be in a position to begin to design interventions that might arrest the degeneration and support the functioning of organs on strike.

Moving up to individual creatures, I’ve already spent some time talking about how we might be able to understand the functioning of a creature better by considering their activity from the control angle. Problems would be understood as problems with control. Recovery from these problems would be recognized as the regaining of control in important areas. Tests and examinations would only assess a person’s control capabilities. Behavioral programs would vanish and people would be assisted to control more effectively not to increase or decrease certain behaviors—which they couldn’t do without controlling, even if they wanted to.

Once we get away from individual creatures and start thinking about what happens when creatures gather together, the control perspective is still vital. If psychologists were control scientists they might be able to make themselves indispensable to governments and social planners by really understanding what is going on. As control scientists they would understand that individuals control their perceptions and they would know that it would be counterproductive to try to control an individual’s actions. They would know that, in the long run, it is useless to try to make people behave as we want them to without considering control as it seems from where they’re standing. Not bombs nor armies nor trade sanctions nor threats nor snubs at dinner parties will change another’s behavior unless, serendipitously, these particular strategies happen to affect what the other is controlling. If control was understood then methods of making others behave as we want would be seen as problematic and perhaps even dangerous. Important people might finally be able to behave like grown ups rather than children in a playground squabbling over a favorite toy. By considering the controlling nature of each other and themselves they could reach amazing decisions with glorious consequences for the humanity of now and later on.

Control is important all over the place. In every possible way the earnest study of control will provide clarity regarding our most troublesome diseases, sicknesses, conflicts, and battles. That’s some “so what?”. But wait! There’s more … By assigning the phenomenon of control to its rightful place in the natural scheme of things, the life sciences will become a legitimate science for the first time. Currently division in the sciences of life is the order of the day. Division is commonplace as different strands of the one area battle for supremacy. In psychology it is important to know whether you are a clinical psychologist or a neuropsychologist or an educational psychologist or a research psychologist, and still there are more. The division is all the more quizzical considering that there is still no consensual body of knowledge to be divisive about. People in the field of psychology say that psychology is the study of behavior and yet they are unable to define what behavior actually is. This squabbling over positions on the club ladder is more reminiscent of rival craftspeople in a cottage industry than it is of a scientific endeavor.

The study of control would provide a unifying thread from which a genuine scientific discipline could grow. The bar has just been raised. A standard now exists by which people can begin to authentically understand the functioning of living things. Once we understand how living things actually go about the business of living we will be well placed to eliminate forever some of the most pervasive problems that currently exist. In fact, some problems might not be problems at all once we learn to ask the kinds of questions that would be relevant from a control paradigm. That’s not bad for one little phenomenon and the theory that explains it.

Thank you Mr. Powers.

1.2 What kind of science has Bill Powers wrought? By Dag Forssell

For the Festschrift. . .

How do I love thee. . . Let me count the ways! . .

Bill, you have brought us a new way of thinking about ourselves and life, a way that I find compelling based on reading your lucid writings and using what I have learned from you as I live, experience and reflect on life. Here is one feeble attempt to share with others the magnificence and significance of your achievement. I was still formulating this paper as we celebrated the fiftieth anniversary of the birth of PCT.

Descriptive versus Generative Scientific Theories

Love, Dag

1.3 The Spark! A Tribute to William T. Powers. By Perry Good

1.4 Language: The Control of Perception. By Joel B. Judd

| Joel B. Judd Adams State College 208 Edgemont Boulevard Alamosa, CO 81102 719-587-7805 jbjudd@adams.edu | Submitted for consideration in the Festschrift for William T. Powers |

It may only be a slight exaggeration to claim that the linguistic Holy Grail consists of explaining the connections between brain functioning and language. In other words, a theory of human communication is an extension of our efforts to explain brain and behavior. If this is the case our beliefs about the brain should have direct implications for our characterization of language, and vice versa. With apologies for co-opting the title of Powers’ seminal book, I suggest the most promising theory addressing the brain-language connection is Hierarchical Perceptual Control Theory (HPCT).

A recent summary of sociolinguistics (Coulmas, 2001) also frames the real question for linguistics:

How is it that language can fulfill the function of communication despite variation?

Powers’ insight was to emphasize the role of behavior in perceptual control. Likewise, the key starting point for linguistics is to observe that language is just as purposeful as other behaviors. One might argue it is the purposeful behavior, for it is quintessentially human. Language forms the basis for most of our complex interactions with family, friends, colleagues and others. It allows for the possibility of resolving disputes and conflicts without resorting to violence. Although Coulmas’ statement refers primarily to the way languages change over time, it also defines the HPCT perspective. From phonemes to syntax, all aspects of individual linguistic behavior—whether written, signed, or spoken—constantly vary, yet we manage in most instances to communicate sufficiently well with those around us to accomplish our day-to-day purposes.

As with other behaviors, the fact that our linguistic behavior varies is not a new discovery. Especially after 1900, the means to document individual linguistic variability increased along with measurement technology. For example, Pillsbury and Meader (1928) conducted a small study among colleagues on the musculature involved in speech production and noticed that phonemes constantly vary. Their conclusion: “It may be seriously questioned whether one ever makes the same group of speech movements twice in a lifetime. If one does, the fact is to be attributed to chance rather than law” (p. 218).

Again in parallel with behavior generally, linguistic variability at the phonemic level doesn’t usually impact our ability to communicate. However, “higher” level variability in morphology and syntax may be crucial, even life-threatening (What did you call me?!). Linguistics, though, has done little beyond describing (or in some cases ignoring) such variability. In other words, most research has relied on attempts to tie observable—or measurable—language behavior to either environmental contexts or presumed cognitive faculties. This has been the source of fundamental problems for much of linguistic research, both from a behaviorist and cognitivist standpoint. As Stanley Sapon pointed out over 30 years ago, “…if we take as our only data the formal properties of an utterance, then the only predictions we can make are predictions of form, not of substance” (Sapon, 1971).

Sapon is alluding to the research pitfall of relying on descriptions of observable behavior in order to predict future behavior (or explain it). For example, attempts to predict exactly which member of the class of words called ‘noun’ will finish a sentence like “What I really like to eat in Summer is ________” have failed. As a result, some researchers have labeled such prediction “trivial.” However, Sapon finds it disingenuous for scientists—and in particular linguists—to

…describe [as trivial] the specific predictions which ordinary, unenlightened people are wont to consider crucial. I, for one, look with compassion upon the craftsman who does not know how to produce estimable work and settles instead for esteeming the kind of work he can do. (p. 77)

What HPCT offers is a recast of the important questions about individual linguistic behavior, how it might develop and function. If there is a hierarchy to perception in general, then the same principles should apply to language as well. If behavior is the control of perception, then linguistic behavior is the control of perception as well.

A Perceptual Control Hierarchy for Language

The following hierarchy is after Powers (1973, 1989) and Judd (1992).

Applicable linguistic concepts are associated with the appropriate level.

| | Level # | Perceptual Level | Type of Perceptual Control | Linguistic Equivalents |
|---|---|---|---|---|
| SUBJECTIVE REALITY | 11 | SYSTEM | Coherent grouping of principles (‘citizen’; ‘religious’; ‘family’) | One’s language “identity”; code-switching in bilinguals; language variation (Coulmas, 2001); socio-cultural aspects |
| | 10 | PRINCIPLE | “Meta-awareness” (thoughts about thoughts); usefulness of programs; guiding ideas (‘honesty’; ‘politeness’) | Pragmatics and usage; language proficiency or aptitude; “prototypes” (e.g., Competition Model) |
| | 9 | PROGRAM | “If-then” decisions from lower levels (perceptual, not behavioral) | Self correction using explicit rules: 3rd-person singular -s, ‘i’ before ‘e’; Krashen’s Monitor (Krashen, 1985) |
| | 8 | SEQUENCE | Perceived order of events (‘beginning’ → ‘end’) | Syntactic ordering of lexical items; description [ordering] of visual episodes (Tomlin, 1987) |
| | 7 | CATEGORY | Grouping, naming, etc. of experiences | Naming (objects, concepts, parts of speech); semantics |
| | 6 | RELATIONSHIP | Connections among events (causation) | Ordering of “arguments” (e.g., “slots”, MacWhinney, 1987) |
| PHYSICAL REALITY | 5 | EVENT | Wholeness of an activity; a “familiar pattern in time” (symphony) | Lexemes (words); idiomatic phrases |
| | 4 | TRANSITION | Change over time (a 5th to major 7th) | Intonation; stress-timed vs. syllable-timed languages |
| | 3 | CONFIGURATION | Unitary, recognizable arrangements (musical chords) | Letters or characters; syllables; phonemes (though see Kaye, 1989) |
| | 2 | SENSATION | Sound quality (pitch); alignment; edge, contrast | N/A |
| | 1 | INTENSITY | Loudness; brightness; pressure [1st-order systems: only direct contact with outside world] | N/A |

The preceding chart outlines some of the more self-evident connections between proposed hierarchical levels of perception and their linguistic equivalents. If nothing else it should be clear that HPCT paints a picture of language that goes beyond the traditional phonology/morphology/syntax/pragmatics breakdown. It also offers a structural basis for some kind of modularity (though probably not the innate modularism of Fodor), as Powers has argued:

. . . the division into levels and the subdivision of levels into independent control systems means that there is modularity at all levels: each level consists of general-purpose control systems of a given type, available for an infinite variety of uses by whatever systems you want to add at the next level. This makes sense in design terms, and in terms of development, evolutionary or within one lifetime. If you want to build a self-organizing robot, make the system modular; that is, hierarchical. (Powers, CSGnet, November 8, 1991)

The remainder of this discussion will offer a few examples that seem to evidence a good fit between HPCT and language.

Our point of departure is the grouping of perceptual levels into “subjective” and “physical” reality. This distinction makes sense in general, and particularly for language and language development. There is growing evidence for similarities in the development of “lower-level” perceptions across languages. The kinds of abilities referred to include sensitivity to the same kinds of temporal and spatial relationships defined by the configuration, transition, and event levels of perception (e.g., Petitto, 2000).

As for the levels included in subjective reality, HPCT offers insights into language learning, linguistic variation, and other sociolinguistic and pragmatic questions such as: Why do men and women use language differently [gender differences]? Does our language shape the way we think, or does the way we think shape our language [linguistic relativism]? How does language serve to mark membership and identity [social class]? Why might some people have a “knack” for learning language while others struggle [aptitude]? What is the most effective way to learn another language [pedagogy]?

In the area of second language acquisition and learning, HPCT may answer, or offer more satisfying explanations for, questionable distinctions and characterizations such as whether there is a difference between “learning” and “acquisition,” or between “instrumental” and “integrative” motivation; if there really are “compound” and “coordinate” bilinguals; or what “fossilization” really means.

These questions are of course tied to the fact that as perception becomes more complex (as we move “up” the hierarchy) it also becomes more idiosyncratic and more subjective. Many are the cortical stimulation studies which localize the same perceptions involved in somatic and proprioceptive behaviors across people (toe movement, vocalization, temperature sensation); few if any are the ones which find higher levels of perception (words, numerical calculation) in the same places across people (e.g., Ojemann and Whitaker, 1978 in the case of bilinguals). As a result, HPCT implies a different research perspective for much of linguistics, one that takes a “specimen” approach (Runkel, 1990) rather than one which continues to rely on recording and tallying behavioral snapshots.

All things considered, there doesn’t seem to be any good reason to propose a separate hierarchy for language. While language is a kind of perceptual experience, perhaps the most important kind for humans as social beings, it is certainly not the only or even always the most effective type of experience. At both extremes of the perceptual hierarchy there are what might be called “a-linguistic” perceptual levels. At the initial levels, first-order systems for language perception are not any different than those for any other perception. Intensities are intensities; whether they will eventually be perceived as “language” makes no difference. At the other extreme, the fact that we have difficulty putting into words concepts such as ‘love’ or defining terms such as ‘patriotism’ fits nicely with the idea that language is a means to an end. With it we can act to control our perceptions, but ultimately it is not the only way we perceive ourselves or the world around us.

Development and Foundation of Language

Any discussion of language acquisition includes some discussion of philosophy as well. This arises from the tendency of linguists (and applied linguists, and teachers) to speak of the ‘mind,’ while biologists, neurologists and others speak of the ‘brain.’ As a result, some have postulated linguistic constructs (e.g., ‘tense’ or “parameters”) that may have little connection to neurophysiological reality.

There is in consequence a kind of dualism in language studies. Jacobs (1988), in his discussion of language acquisition, points out, “[D]ualistic thought on such matters is unjustified: physiological states and mental states of the brain, incomprehensible as both may be, are one and the same . . . all learning involves anatomical/physiological alteration of neural substrate” (p. 306). From a philosophical perspective, Bunge (1986) speaks of this same dualism when he says that “. . . the spinning of speculative hypotheses concerning immaterial ‘mental structures’ has taken the place of serious theorizing and experimenting on the linguistic abilities and disabilities of living brains” (p. 229), [emphasis added].

In support of a unitary approach to language study (that is, one which avoids theorizing about mental structures that violate known neurological principles), some have begun to see principles of perceptual control in language. Phonology again provides an example. In HPCT the brain begins to distinguish printed or spoken language from other perceptions at the configuration level. The quality of the perception doesn’t matter as much as recognizing that it is “language” and not some other kind of visual or aural perception. As with, say, a musical chord, phonemes can be recognized whether a high, squeaky voice or a deep booming one produces them. So while the traditional linguistic definition of phonemes as “basic units of speech” seems to be appropriate, there is still something missing.

Perhaps this is why some have challenged traditional conceptualizations. Kaye (1989) has said, “The phoneme is dead” (p. 154). His claim stems from consideration of questions such as: how does one determine what a phoneme is? Feature-based definitions become impractical when one considers how phonemes appear in spoken language (recall Pillsbury and Meader’s observations). In short, many phoneticians are adopting approaches to phonology that deal with principles of phonological elements and their combinatorial possibilities and variations (Kaye, 1989, p. 164). Such developments would support Powers’s description of configuration perceptions.

Viewing phonology as part of a hierarchy of perceptual control can account for another problematic phonological entity, diphthongs. While a diphthong may at first glance appear to require perception of transition (and perhaps developmentally children initially perceive them that way), it is categorized as a phoneme, at least in English (Mackay, 1987). This is because its sound contrasts meaningfully with other sounds. English-speaking adults are generally completely unaware that the [ai] vowel sound in a word like ‘high’ is a diphthong since it is perceived as a single phone.

Insights into other aspects of early language development also corroborate implications of HPCT. Infants begin to perceive key phonemic distinctions within a matter of weeks after being born (Molfese, 1977; Molfese et al., 1983), and children in a monolingual environment settle on their language’s repertoire of sounds before a year has passed (Kuhl, Williams, Lacerda, Stevens & Lindblom, 1991). Interestingly, though perhaps not surprisingly, Mack (1989) showed that children raised in a bilingual environment develop a “middle ground” for their production of similar phonemes in their two languages, thus simplifying the neurological load as well as satisfying reference levels—perhaps the PCT equivalent of killing two birds with one phone!

Any discussion of development also raises the question of critical periods: is there one or more such periods for human language? Strictly speaking, a biological critical period by definition requires that an organism have certain experiences within a particular temporal window or else key perceptions or behaviors never emerge (e.g., birdsong). This is the strong form of the hypothesis. Because such a clear-cut case for human language has never been documented (and it would be slightly unethical to artificially create one), weaker versions of this hypothesis—“sensitive” periods—are proposed for language (Snow, 1987).

Part of the reason there is no all-or-nothing evidence no doubt stems from the fact that human language is complex, and becomes even more so if viewed in light of HPCT. A true critical period for “language” would involve virtually the entire brain! An alternative would be to propose multiple critical or sensitive periods: one for configuration-related perceptual development, another for transition-related perceptual development, and so on. While again there is no definitive evidence for even this kind of perspective, there is some evidence of the need for early interaction with certain fundamental components of language.

One of the most obvious results of someone learning a language after childhood (non-native or “L2” learning) is an accent. Enough formal and anecdotal evidence has accumulated over the years to claim that the likelihood of having an accent in an L2 increases rapidly after about age five, and those individuals who learn a language after puberty almost never sound like “native” speakers. Why is this the case? One explanation could be that given the early timeframe for developing fundamental phonological perception and production, those who attempt to alter such perceptions later in life face the daunting task of modifying systems that have become automatized and subsumed into higher-level perceptions to the point where it is extremely difficult to access them again. Furthermore, if the more mature (older) individual’s communicative needs (i.e., higher-level references) are met even with an accent, sounding like a native speaker may not be a high priority. Indeed, some interesting work has shown that certain accents are considered prestigious and may in fact be desirable!

Language and Subjective Perceptions

What of higher-level, or subjective, linguistic perceptions? HPCT offers equally provocative possibilities. Some of Sapon’s concerns have borne fruit as linguists and psychologists struggle to provide more than a descriptive account of language learning yet one that avoids the triviality he criticizes.

Probably the main issue confronted by researchers is the logical problem of language acquisition. This is the problem of overgeneralization, or the fact that children seem immune to [adult] attempts to correct their grammatical mistakes [1]. Most well-known linguists have promoted a search for some sort of innate constraint(s) on learning that allow a child to recover from overgeneralizing. There is however at least one well-known alternative to the search for a “black box,” and that is a model which fits nicely with PCT principles: the Competition Model (MacWhinney, 1987, 2001). In contrast to the apparent either/or description of linguistic choices one finds in much research, close examination of language corpora reveals that children take a weighted approach to linguistic decisions. That is, articulatory skills, vocabulary, semantic relationships, even grammatical roles are not acquired through neatly packaged, discrete choice mechanisms; one does not select his or her language components from environmental or genetic multiple choice databanks. Instead, linguistic behaviors result from the interplay of various contextual and neurological factors, or in PCT terms, reference levels and disturbances to them.

In fact, these abilities seem to fall more in line with “fuzzy logic” descriptions of decision-making. A good example would be the development of linguistic “prototypes.” Although the fact that we “learn” vocabulary and parts of speech gives the impression of finiteness to our linguistic knowledge, in reality that knowledge is fluid and variable. The semantics for objects we drink out of, or for concepts such as ‘team,’ change as we gain relevant experiences. In the same way, our [implicit] understanding of language grows and changes through our experiences. These experiences help us develop prototypical examples and categories for grammatical relationships and other types of linguistic knowledge needed for effective communication. They also form the basis for our decisions regarding such things as word choice (categories) or lexical ordering (sequence).

Such a system would be more in line with our understanding of brain and neural functioning, and posits a view of language that reflects its functional nature (Bates & MacWhinney, 1989). In fact, the implications of an HPCT perspective on language fit well with MacWhinney’s (1999) suggestion that the nature-nurture interaction be replaced with “emergentism”; that is, replacing models that stipulate “specific hard-wired neural circuitry” with “structures [that] emerge from the interaction of [biological and environmental] processes.”

Another but by no means final contribution of HPCT to understanding language involves the provocative nature of the “highest” levels of perception and their relationship with language and learning. As already mentioned, a Perceptual Control Hierarchy for Language suggests that at both the lowest and highest levels our perceptions of the world diverge from mostly language-based perceptions. It is important to realize that we can create a world of words that have little connection to personal (or “real world”) experience. This danger was wonderfully described by Powers (1973):

When that happens [failing to test symbol-manipulation against experience], word-manipulation carries them out over an empty abyss. Words lead to other words, but all links with experience are left behind. One can easily find himself chasing what may prove to be a ghost; what is the “real” meaning of intelligence or concept or vicarious meditation or quark? (p. 166)

Or in the case of language “aptitude” or “proficiency” and so on? We need to be able to move beyond “word-manipulation” if we are to completely understand the connection between language and behavior. HPCT offers perceptual control at a more overarching level than program or sequence. These higher levels offer explanations for how influential our view of self is in our eventual attainment of language abilities. The field of Second Language Acquisition (SLA) is a good source of tantalizing evidence.

One popular notion in SLA is that of “fossilization”—the point(s) at which learners appear to cease progressing in their development of second language skills (Selinker, 1972). As one might suspect, closer examination of individual subjects provides glimpses into higher-level perceptual control. For example, Selinker (1972) cites a thesis by Kenneth Coulter that examined two Russian speakers learning English. After investigating their failure to continue developing English language skills, Coulter concluded that some “strategy” lets learners know when they have “…enough of the L2 in order to communicate. And they stop learning” (p. 217). Another SLA researcher provided Selinker with personal commentary on learning strategies, suggesting that such strategies “…evolve whenever the learner realizes, either unconsciously or subconsciously, that he has no linguistic competence with regard to some aspect of the L2” (p. 219).

Along these same lines, more recent work has focused on the strategies used by second language learners. An interesting pair of students (Spanish-speakers learning English) observed by Abraham and Vann (1987) showed marked differences in the amount of time each employed key strategies (e.g., paraphrasing for understanding, repeating corrections) and how often they used such strategies. The authors’ interpretation of their observations is revealing:

Gerardo appeared to take a broad view [of language]: his flexibility, variety of strategies, and concern with correctness suggest a belief that language learning requires attention to both function and form…In contrast, Pedro’s view of second language learning was relatively limited. He appeared to think of language primarily as a set of words and seemed confident that if he could learn enough of them, he could somehow string them together to communicate. The exact way they should be combined (and, indeed, the actual forms the words should take) was relatively unimportant. Acting in accordance with this view, Pedro had adopted certain positive strategies that enabled him to be successful in unsophisticated oral communication…(p. 95, emphasis added)

The italicized words suggest higher-level perceptual control that extends down through and influences many aspects of language ability. It turned out that upon more detailed conversation with the two students Gerardo had aspirations of completing college and accepted the fact that effective, academic use of English was important to attaining this goal. Pedro, on the other hand, had a narrow view of what he needed to know and was mostly interested in talking to girls on the beach. Such revelations would make them excellent candidates for a version of “The Test,” though that is a topic outside the scope of this discussion.

Finally, Tucker (1991) reported on adult immigrants to the U.S. and their progress in learning English. One woman had emigrated to New York when she was in high school. Her English writing was understandably poor—it lacked the expected elements of organization and grammar. These faults persisted through school and two attempts at college. However, in her third try at college suddenly this woman’s writing improved markedly. In talking at length with her, Tucker found that since her last try at college she had divorced, gained insights into her own identity and abilities, and had developed professional goals for herself. As with the two students above, it appears that with a marked change in her definition of concepts such as “independent” and “businesswoman,” this woman found that she could effect significant change in what had appeared to be static or “fossilized” language skills.

The fact that such evidence fits well with HPCT encourages further investigation along these lines. Who knows but what many of the tedious details involved in language learning would actually change if we simply understood better how learners ultimately see themselves? And wouldn’t learning itself change when seen mainly as a question of whether learners perceive a discrepancy between where they “are” and where they “want to be”?

This Big Picture view of language no doubt reflects a highly influential level of perceptual processing, one which deserves more attention. As with other kinds of purposeful behavior, its effects have been noted for some time now. Witness this observation, again from Pillsbury and Meader (1928):

When beginning to write, each stroke needs attention; when beginning to sew or crochet, each stitch; but with practice the vague intention to write a letter on a certain subject may be all that is necessary to take one to the typewriter in the other room and complete the writing. (p. 169, emphasis added)

Later they offer an interesting summation of communicative purpose, “We are primarily interested in the effect we desire to produce upon the listener and so are usually not attentive to the processes by which we produce the effect” (p. 255). Pillsbury and Meader are not alone; Dunkel (1948) concludes a chapter on speaking by suggesting:

… most of the time, “one thing leads to another” in speech and we end up by having said what we wanted to say. We may have “purpose,” “volition,” “will,” “motive,” or what not. Whatever this guiding force may be, we know little about it though it is the ultimate dictator of the whole process of speech. (p. 60, emphasis added)

The examples could go on, but the few given suggest a fruitful role for linguistic research from the perspective of HPCT. The intent here was to point out some ways in which the principles of control theory, applied to language through a hierarchy of perceptual control, might lead to answers for key questions regarding language and language learning. Certainly we are often skeptical when one theory looks to be an answer for everything. But until something better comes along, HPCT promises a wealth of insights into the how and why of human language.

Works Cited

Abraham, R., & Vann, R. (1987). Strategies of two learners: A case study. In A. Wenden and J. Rubin (Eds.) Learner strategies in language learning (pp. 85-102). Englewood Cliffs, NJ: Prentice Hall.

Bates, E. & MacWhinney, B. (1989). Competition, variation and language learning. In B. MacWhinney and E. Bates (Eds.) The crosslinguistic study of sentence processing. New York: Cambridge University Press.

Bunge, M. (1986). A philosopher looks at the current debate on language acquisition. In I. Gopnik & M. Gopnik (Eds.) From models to modules (pp. 229-239). Norwood, NJ: Ablex Publishing Company.

Coulmas, F. (2001). Sociolinguistics. In M. Aronoff & J. Rees-Miller (Eds.) The Handbook of Linguistics (pp. 563-581).

Dunkel, H. (1948). Second Language Learning. Boston: Ginn.

Jacobs, B. (1988). Neurobiological differentiation of primary and secondary language acquisition. Studies in Second Language Acquisition, 10, 303-337.

Judd, J. (1992). Second Language Acquisition as the Control of Non-primary Linguistic Perception: A Critique of Research and Theory. Unpublished doctoral dissertation, Urbana, IL.

Kaye, J. (1989). Phonology. Hillsdale, NJ: Lawrence Erlbaum.

Kuhl, P., Williams, K., Lacerda, F., Stevens, K., & Lindblom, B. (1991). Linguistic experience alters phonetic perception in infants by 6 months of age. Science, 255, 606-608.

Mack, M. (1989). Consonant and vowel perception and production: Early English-French bilinguals and English monolinguals. Perception and Psychophysics, 46(2), 187-200.

Mackay, I. (1987). Phonetics (2nd ed.). Boston: Little, Brown and Co.

MacWhinney, B. (1987). The Competition Model. In B. MacWhinney (Ed.). Mechanisms of language acquisition (pp. 249-308). Hillsdale, NJ: Erlbaum.

MacWhinney, B. (1999). The Emergence of Language (preface). Mahwah, NJ: Lawrence Erlbaum.

MacWhinney, B. (2001). First Language Acquisition. In M. Aronoff & J. Rees-Miller (Eds.). The Handbook of Linguistics (pp. 466-487).

Molfese, D. (1977). The ontogeny of cerebral asymmetry in man: Auditory evoked potentials to linguistic and non-linguistic stimuli. In J. Desmedt (Ed.). Progress in Clinical Neurophysiology, Vol. 3. Basel: Karger.

Molfese, V., Molfese, D., & Parsons, C. (1983). Hemispheric processing of phonological information. In N. Segalowitz (Ed.). Language function and brain organization. NY: Academic Press.

Ojemann, G. & Whitaker, H. (1978). The bilingual brain. Archives of Neurology, 35, 409-412.

Petitto, L. (2000). On the biological foundations of human language. In K. Emmorey and H. Lane (Eds.). The signs of language revisited: An anthology in honor of Ursula Bellugi and Edward Klima. Mahwah, NJ: Lawrence Erlbaum.

Pillsbury, W. & Meader, C. (1928). The psychology of language. NY: D. Appleton and Co.

Powers, W.T. (1973). Behavior: The control of perception. Chicago: Aldine.

Powers, W.T. (1989). Living control systems. Gravel Switch, KY: Control Systems Group.

Powers, W.T. (1991). CSGnet, November 8, 1991.

Runkel, P. (1990). Casting nets and testing specimens. NY: Praeger.

Sapon, S. (1971). On defining a response: A crucial problem in the analysis of verbal behavior. In P. Pimsleur & T. Quinn (Eds.). The psychology of second language learning (pp. 75-86). London: Cambridge University Press.

Selinker, L. (1972). Interlanguage. IRAL, 10, 209-231.

Snow, C. (1987). Relevance of the notion of a critical period to language acquisition. In M. Bornstein (Ed.) Sensitive periods in development: Interdisciplinary perspectives (pp. 183-209). Hillsdale, NJ: Erlbaum.

Tucker, A. (1991). Decoding ESL. NY: McGraw Hill.

1.5 Looking Back Over The Next Fifty Years of PCT. By Richard S. Marken


The RAND Corporation

The year 2003 is the 30th anniversary of the publication of William T. Powers’ Behavior: The control of perception (B:CP), the first book to describe the theory of behavior that has come to be known as Perceptual Control Theory or PCT. It is also, as stated in the request for contributions to this volume, the 50th anniversary of Powers’ “initial steps in the research that has led to PCT”. I might add that it is also the 25th anniversary of my own involvement with PCT, which began in earnest in 1978. So now seems like a nice time to take stock of the state of PCT. And we are doing this with this well-deserved Festschrift in honor of William T. Powers. I would like to contribute to this Festschrift by looking forward rather than backward. I have done my share of reminiscing about the past history of PCT, so far as I am familiar with it. I have lamented, in private and in print, the failure of PCT to attract the interest of behavioral scientists over the last 30 years, since the publication of B:CP made the PCT perspective readily accessible to the behavioral science community. What I would rather do now is look back on the future of PCT by taking an imaginary look at what I think the next 50 years of PCT will have been like.

Looking back over the next fifty years I see that PCT has become the dominant perspective in the behavioral sciences, having replaced behaviorism, cognitive science and evolutionism. I see this because to see anything else would be foolish. If PCT has not become dominant then this essay, and the Festschrift for which it was composed, will have been completely forgotten. So what do the behavioral sciences look like now that they are based on PCT? Perhaps what is most obvious to this visitor from 50 years in the past is the almost complete absence of statistical analysis in behavioral research. Research aimed at testing theories of individual behavior is now based on control models of individuals rather than statistical models of aggregates. Researchers no longer report statistical significance but real significance, in terms of how well the behavior of the model matches the behavior they have observed.

Modeling is now the basis of behavioral science research. Modeling tools are available which make it easy for the researcher to quickly build a model of the behaving system that includes an accurate model of the physical environment in which the system’s behavior is produced. These modeling tools take advantage of the ever-increasing power of digital technology to produce real-time digital simulations of dynamic interactions between system and environment. Behavioral research, like physics and chemistry, is now a science based on modeling rather than a guessing game based on statistical significance testing.

Behavioral science is based on modeling because behavioral research methods are now based on testing for controlled variables (Marken, 1997). Behavioral scientists now understand that the apparent randomness of behavior was an illusion created by ignoring the variables that organisms control. What behavioral scientists had called "responses" are now understood to be actions that protect controlled variables from disturbances. Disturbances correspond to what behavioral scientists had called "stimuli". When many disturbances affect the state of a controlled variable, actions will appear to be randomly related to any one of those disturbances (stimuli). PCT has moved the focus of behavioral science from the randomly-noticed stimulus-response relationships that were the subject of statistical studies of behavior to the consistently controlled perceptions that are now the centerpiece of models of behavior (Marken, 2001).
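The point about apparent randomness can be illustrated with a minimal simulation sketch. Everything in it is an assumption made for illustration and is not taken from any published PCT model: a single integrating control system, ten independent slowly varying disturbances, and the particular gain, step size, and function names. The system's action is correlated with one disturbance and then with the sum of all disturbances acting on the controlled variable.

```python
import math
import random


def smooth_disturbance(n, rng, smoothing=0.005):
    """Slowly varying disturbance: low-pass filtered Gaussian noise."""
    value, series = 0.0, []
    for _ in range(n):
        value += smoothing * (rng.gauss(0.0, 1.0) - value)
        series.append(value)
    return series


def variance(xs):
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / len(xs)


def correlation(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    return cov / math.sqrt(variance(xs) * variance(ys))


def run_control_loop(disturbances, reference=0.0, gain=50.0, dt=0.01):
    """Hypothetical control system: the controlled variable is the action
    plus the sum of all disturbances; the action integrates the error."""
    action, actions, controlled = 0.0, [], []
    for t in range(len(disturbances[0])):
        cv = action + sum(d[t] for d in disturbances)  # perceived variable
        action += gain * (reference - cv) * dt         # act to reduce error
        actions.append(action)
        controlled.append(cv)
    return actions, controlled


rng = random.Random(0)
steps = 20000
disturbances = [smooth_disturbance(steps, rng) for _ in range(10)]
total = [sum(d[t] for d in disturbances) for t in range(steps)]
actions, controlled = run_control_loop(disturbances)

r_single = correlation(actions, disturbances[0])  # one "stimulus" in isolation
r_total = correlation(actions, total)             # net disturbance to the variable
print(f"action vs. one disturbance:   r = {r_single:.2f}")
print(f"action vs. total disturbance: r = {r_total:.2f}")
print(f"variance of controlled variable / variance of total disturbance = "
      f"{variance(controlled) / variance(total):.3f}")
```

In this sketch the action is only loosely related to any single disturbance, yet it mirrors the net disturbance almost exactly, and the controlled variable itself barely moves. A researcher who tracked only one "stimulus" would see what looks like noise.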

Research in all areas of behavioral science is now organized around testing for controlled variables. Behavioral scientists no longer ask, “What is the cause of the organism’s behavior?” They now ask, “What perceptual variable(s), if controlled by the organism, would lead me to see the organism behaving in this way?” This emphasis on testing for controlled perceptual variables has led to a new style of research in which the subjects of behavior studies are allowed to have better control over variables in their environment. The style of research which was aimed at measuring an organism’s “responses” to the presentation of discrete “stimuli” has been replaced by research aimed at measuring an organism’s ability to control perceptual variables that are being influenced by smooth variations in environmental variables that are disturbances to these variables.
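One way to picture testing for a controlled variable is the rough sketch below. It is an illustration under stated assumptions, not a description of any particular study: the simulated participant, the two candidate variables, and the `stability_ratio` helper are all hypothetical. For each candidate, the observed variance is compared with the variance expected if nothing opposed the disturbance; a ratio near zero suggests the candidate is under control.

```python
import math


def variance(xs):
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / len(xs)


def stability_ratio(observed, disturbance_effect):
    """Observed variance of a candidate variable divided by the variance
    expected if the disturbance were unopposed. Near 0 suggests control."""
    return variance(observed) / variance(disturbance_effect)


# Smooth disturbance applied by the (hypothetical) experimenter.
disturbance = [
    math.sin(2 * math.pi * t / 500) + 0.5 * math.sin(2 * math.pi * t / 173)
    for t in range(3000)
]

# Hypothetical participant who keeps cursor = handle + disturbance near zero.
handle, handles = 0.0, []
for d in disturbance:
    cursor = handle + d
    handle += 0.5 * (0.0 - cursor)  # move the handle to reduce the error
    handles.append(handle)

# Two guesses about what the participant might be controlling.
candidates = {
    "handle + disturbance": [h + d for h, d in zip(handles, disturbance)],
    "handle - disturbance": [h - d for h, d in zip(handles, disturbance)],
}
ratios = {name: stability_ratio(values, disturbance)
          for name, values in candidates.items()}

for name, ratio in ratios.items():
    print(f"{name}: stability ratio = {ratio:.3f}")
```

Only the first candidate stays far more stable than the disturbance alone would predict, so it is the better guess about what this simulated participant is controlling. The second candidate varies more than the disturbance does, which rules it out.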

Ingenious new experimental techniques have been developed that allow researchers to observe the state of hypothetical controlled variables while the variables are being disturbed. These techniques are similar to those developed long ago in the study of the perceptions controlled by baseball outfielders when they catch a fly ball. For example, McBeath et al. (1995) used a video camera attached to a fielder’s shoulder to observe the state of optical variables, such as the optical trajectory and acceleration of the ball, that the fielder might be controlling while catching fly balls. These early efforts were often limited by the failure of the researchers to record disturbances, such as the actual trajectory of the ball, to these hypothetical controlled variables. But these studies were important precursors to current PCT-based research inasmuch as they focused the attention of researchers on the importance of monitoring the state of possible controlled variables.

Research aimed at the identification of the perceptual variables controlled by humans and other organisms has been going on for several decades and the catalog of controlled variables continues to grow. Much of the research effort these days is aimed at classifying controlled variables and studying the relationship between systems controlling different types of perceptual variables. Much of this work supports the basic framework of a hierarchy of perceptual control systems that was originally proposed by Powers (1973, 1998). In particular, the research results are consistent with Powers’ brilliant suggestion, based at the time only on subjective experience, that the hierarchy of control is organized around a limited number of different classes of perceptual variables. Although these perceptual classes are not precisely the same as those suggested by Powers, it is now clear that there are a limited number of different kinds of perceptual variables. The research is also consistent with Powers’ suggestion that lower level classes of perceptual variables are used as the means of controlling higher level classes of perceptual variables. It is a testament to the scientific depth of Powers’ work that this hierarchical relationship between perceptual classes was suggested well before there was any significant objective data to support it.

Progress in research and modeling has gone hand in hand ever since scientists started looking at behavior through PCT glasses (Marken, 2002). This is because research and modeling are inextricably interrelated in the PCT approach to behavior. Progress in research depends on the development of models that explain the research results. Similarly, progress in the development of models of behavior depends on research aimed at testing the predictions of these models. This tight interrelationship between research and modeling has resulted in the development of models that produce remarkably realistic behavior. Some early models based on PCT (Powers, 1999; Marken, 2001) hinted at the kind of realism that such models could produce. Current models benefit from many years of research into the variables that organisms actually control while carrying out various behaviors. They also benefit from the realism that can now be achieved in terms of simulation of the physical environment in which behavior actually occurs.

The science of PCT has not only increased our understanding of behavior, it has also contributed to developments in many areas of practical endeavor. For example, PCT-based models of behavior have paved the way for the development of robots that can perform very complex and dangerous tasks in highly unpredictable, disturbance-prone environments. PCT models of economic behavior have made it possible for policy experts to design economic policies that preserve the best results of capitalism, in terms of the production of wealth, while eliminating its worst wrongs, such as the maintenance of egregious wealth inequality. World population is stabilizing near zero population growth, poverty has now been largely eliminated and sustainable, prosperous no-growth economies are now a feature of nearly all world societies. The new economic model has resulted in the development of economic systems that depend more on reuse of existing resources than depletion of natural resources so that environmental pollution has been reduced to very low levels.

PCT has also become part of the popular understanding of "how people work". This means that people in general now have a better understanding of how to deal with each other on an everyday basis. In particular, people are better able to deal with the inevitable conflicts that arise between themselves and others. People now understand conflicts to be the result of conflicting goals rather than conflicting actions. They also understand that the solution to conflict does not lie in pushing harder against it. When they find themselves in conflict, people are now more apt to look at themselves and ask, "What do I really want?" rather than look at their adversary and ask, "How can I get them to change?" The prevalence of PCT has not turned the world into utopia but it has reduced the level of violence in the world considerably, since violence is now understood to be the cause of rather than the solution to interpersonal (and international) conflict.

Looking back over the next 50 years I see that perhaps the greatest legacy of PCT is a change in the tone of the conversation regarding the nature of human nature. The argument between liberals who believed that all human ills were caused by society and conservatives who believed that all human ills were the result of freely made bad choices has become more nuanced. PCT shows that the difference between liberals and conservatives was simply a difference in the part of the control loop at which one focused their attention. The liberals saw disturbance resistance as evidence of social control of behavior while conservatives saw the existence of a higher level goal as evidence of free choice. The liberal/conservative argument has largely disappeared with the realization that both points of view were correct. We can reduce social ills by reducing social disturbances, such as poverty, so that people can control more effectively. But we can also reduce social ills by freely choosing goals, such as moderation and kindness, that reduce conflict by reducing the degree to which we, ourselves, are social disturbances to others.

Thirty years before the beginning of these next 50 years, William T. Powers introduced an exciting and revolutionary new view of behavior to the scientific establishment of the day. The new view was that behavior is the control of perception. Powers proposed this view at a time when the prevailing view was that behavior is controlled by perception. Thus, when Powers introduced his new view of behavior it was rarely understood, often ignored and sometimes angrily rejected. Now the idea that behavior is the control of perception is taken for granted. This Festschrift is a long overdue celebration of the work and person of William T. Powers, who first presented the perceptual control view of behavior to a skeptical and often hostile audience.

References

Marken, R. S. (1997). The dancer and the dance: Methods in the study of living control systems. Psychological Methods, 2 (4), 436-446.

Marken, R. S. (2001). Controlled variables: Psychology as the center fielder views it. American Journal of Psychology, 114, 259-281.

Marken, R. S. (2002). Looking at behavior through control theory glasses. Review of General Psychology, 6, 260-270.

McBeath, M. K., Shaffer, D. M., and Kaiser, M. K. (1995). How baseball outfielders determine where to run to catch fly balls. Science, 268, 569-573.

Powers, W. T. (1973). Behavior: The control of perception. Hawthorne, NY: Aldine DeGruyter.

Powers, W. T. (1998). Making sense of behavior. New Canaan, CT: Benchmark.

Powers, W. T. (1999). A model of kinesthetically and visually controlled arm movement. International Journal of Human-Computer Studies, 50 (6), 463-581.

1.6 Late at night contemplating Erving Goffman as a Perceptual Control Theorist. By Dan E. Miller

Late at night contemplating Erving Goffman as a Perceptual Control Theorist [1]

Dan E. Miller

University of Dayton

Late one winter evening several years ago I was rereading Frame Analysis by Erving Goffman when I realized that his theoretical approach was akin to that of Powers’ Perceptual Control Theory. Was Goffman a closeted perceptual control theorist? Were these similarities merely coincidental? But, as I began to identify his ideas as being based in the principles of Perceptual Control Theory, they made increasing sense to me. Had Goffman read William Powers’ book, Behavior: The Control of Perception? Did a connection exist between the two? And so, this journey began.

BACKGROUND

Symbolic Interactionists have mostly escaped the obsession with behaviorism shared by many proponents of Perceptual Control Theory. The reason for this is relatively straightforward: symbolic interactionism is based on a withering critique of behaviorism (Mead 1934). Indeed, an elementary cybernetic theory has been in existence for over a century in the social sciences. [2] For example, an early cybernetic explanation is apparent in Dewey’s (1896) critique of the reflex arc in psychology in which he argued that the neural circuitry of the reflex arc had been misinterpreted. This misinterpretation resulted in the notion of a stimulus that was separate from its response. In fact, as Dewey noted, the underlying physiology indicated a unity and continuity of coordinated action. Separate, temporally contingent, sequential events were not consistently identifiable. Rather, he noted that humans act purposively in order to solve existential problems. In doing so they must continually adjust their behavior with regard to the desired outcome in order to successfully complete the act. Dewey’s argument calls for circular (or cybernetic) logic rather than the cause – effect model of behaviorism (Shibutani 1968).

Mead’s (1934; 1938) extension of Dewey’s ideas served as the basis of a theoretical position that was later called “symbolic interactionism.” For Mead, mind and self are self-regulating processes arising through our actions in the natural and social world. Implicit in the development of his ideas is a feedback loop with a comparison process and an objective (a desired future state) – all of which he included in the process of “the act.” To Mead “the act” was what the organism was doing at the time – purposive behavior with a desired outcome. The act was all encompassing, from its impulse to culmination. The act, then, involved both perception and action in a circular process. That is, one’s actions must be carried out in a way so that the response continually acts back on the organism, who selectively perceives those stimuli that enable the continuation of the act to its culmination (Mead 1938). For Mead individuals continually adjusted their behavior as a result of an implied comparison between the present circumstances and the desired future state. Mead’s theory of the social act is an established and universally accepted principle among interactionists. It is the foundation upon which symbolic interactionism [3] was developed and his ideas form the basis of how interactionists think about individual and social behavior.

Mead’s ideas flourished in sociology departments and graduate programs, and symbolic interaction became a major theory within the discipline of sociology, with interactionists serving as critics of the more behavioristic approaches in the discipline. In other disciplines – notably engineering and information systems – cybernetics and control theory were being developed. In 1943 Rosenblueth, Wiener, and Bigelow published their paper, “Behavior, Purpose, and Teleology,” outlining the principles of a cybernetic theory of human behavior. Wiener’s book, Cybernetics, published in 1948, further expanded the principles of self-regulating systems into the biological and behavioral sciences. As these ideas developed, and in the belief that a major new approach to science was afoot, a series of influential conferences was held – from 1944 to 1956 – on Circular Causal and Feedback Mechanisms in Biological and Social Systems. Sponsored by the Josiah Macy, Jr. Foundation, these conferences were attended by notables including Norbert Wiener, John von Neumann, Gregory Bateson, Ross Ashby, Don Jackson, and the young sociologist, Erving Goffman. [4] William Powers’ important work developing the principles of Perceptual Control Theory began a few years later. [5]

INTRODUCTION

The title of this paper may raise some questions for the readers of this volume. For example, “What does Erving Goffman have to do with Perceptual Control Theory or Bill Powers?” and “Who is Erving Goffman, and why should we care about him?” Goffman was, perhaps, the best known and most influential sociologist of the last half of the 20th Century. I have established a likely connection between Goffman and Bill Powers – maybe not the persons themselves, but their ideas. Unlike Powers, Goffman was only incidentally interested in individual behavior. Rather, like Mead, he was interested in social acts constructed by people. His major concerns involved how the self and others interacted in the process of constructing and regulating the “interaction order”.

Goffman did not simply describe interaction processes. Rather, he delved into the inner workings of his actors, whom he characterized as purposive, self-regulating agents. For example, when reading Goffman one becomes aware that his humans are as often guided by what they would avoid as by what they would attain (Schudson 1984). His actors purposively avoid situations that would engender embarrassment, humiliation, the loss of face, a loss of poise, an interruption in the proceedings, and a failure to complete the act. In addition, Goffman’s actors help each other through those difficult situations that may end in failure or embarrassment.

Early in his career Goffman (1959) acknowledged the significance of Mead’s ideas to his own work, but then clearly stated his intention of going beyond Mead’s discussion of individual and social action. In his early work Goffman (1959; 1963) was clearly employing ideas of control. Take, for example, his concept of impression management. Actors in the process of managing the impressions that others have of self do not, and cannot, know with any certainty that a specific behavior will be successful. Rather, they adjust their behavior based on the perceptual input of the responses of others to their initial behaviors. Impression management is accomplished by controlling perceptual input.